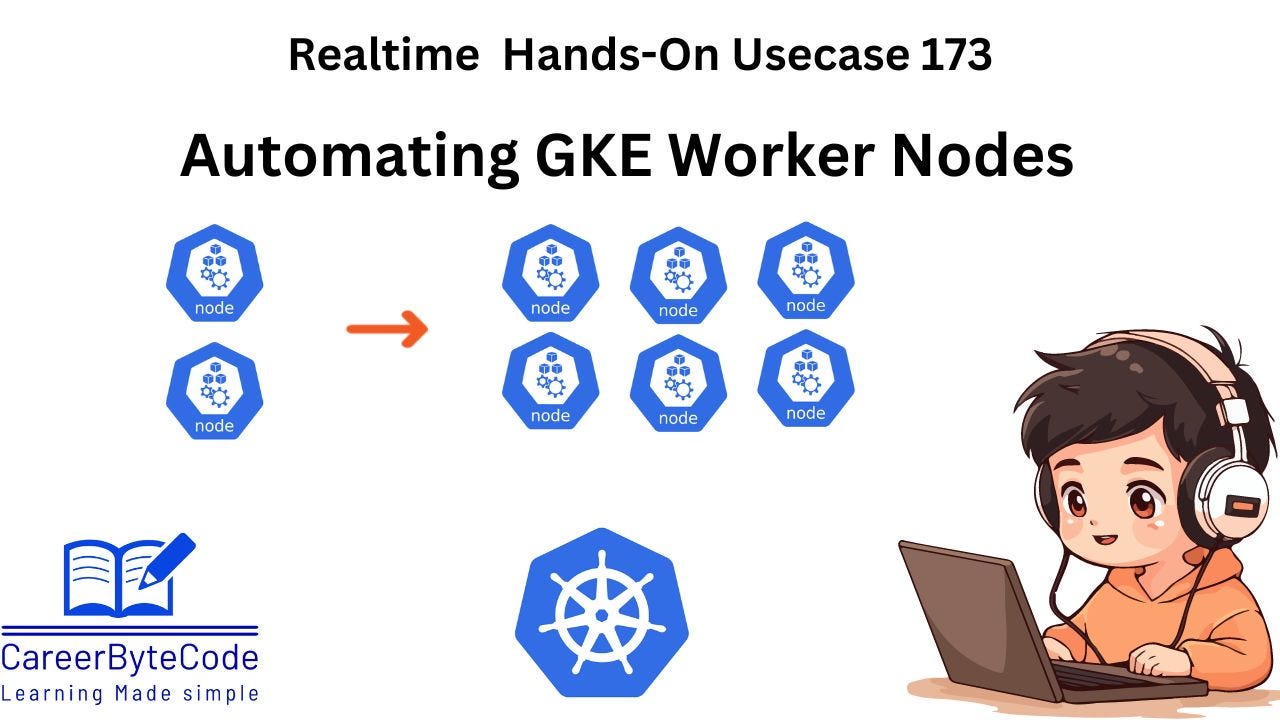

Respond to Traffic Spikes with Automatic GKE Node Scaling

1. Why We Need This Use Case

In modern cloud-native environments, workloads are often highly dynamic: fluctuations in user demand translate directly into changing resource requirements. Manually adjusting the number of worker nodes in a Kubernetes cluster to keep up with that demand is slow and prone to human error. By automatically scaling worker nodes based on resource demand, we can:

Optimize Resource Usage: Ensure that resources are available when needed and not wasted when demand is low.

Improve Performance: Maintain application responsiveness during peak usage times.

Reduce Operational Overhead: Minimize manual interventions for scaling operations.

Improve Cost Efficiency: Pay only for the resources you actually need by scaling down during off-peak hours.
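
To make the cost point concrete, here is a minimal Python sketch comparing a fixed-size node pool with one that grows and shrinks with demand. Everything in it is an assumption for illustration: the demand figures, the per-node capacity, the node limits, and the helper `nodes_needed` are all hypothetical. It is not how GKE's cluster autoscaler is implemented; the real autoscaler reacts to Pods that cannot be scheduled and to underused nodes, not to a simple formula like this.

```python
import math


def nodes_needed(requested_cpu: float, cpu_per_node: float,
                 min_nodes: int, max_nodes: int) -> int:
    """Smallest node count that fits the requested CPU, clamped to [min_nodes, max_nodes]."""
    raw = math.ceil(requested_cpu / cpu_per_node)
    return max(min_nodes, min(max_nodes, raw))


# Hypothetical CPU demand (in cores) for six consecutive hours.
hourly_demand = [2, 3, 14, 22, 9, 2]

# Hypothetical node size and node pool limits.
CPU_PER_NODE = 4
MIN_NODES, MAX_NODES = 1, 6

# Node count per hour if the pool follows demand.
autoscaled = [
    nodes_needed(d, CPU_PER_NODE, MIN_NODES, MAX_NODES) for d in hourly_demand
]

# Node-hours billed: a pool fixed at peak size vs. a pool that follows demand.
fixed_node_hours = MAX_NODES * len(hourly_demand)
autoscaled_node_hours = sum(autoscaled)

print(autoscaled, autoscaled_node_hours, fixed_node_hours)
# prints: [1, 1, 4, 6, 3, 1] 16 36
```

With these made-up numbers, the pool that follows demand uses 16 node-hours instead of 36 while still reaching the same peak capacity of 6 nodes. Actual savings depend on how spiky your traffic is and on how quickly nodes can be added and removed.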

2. When We Need This Use Case

Variable Traffic Patterns: Applications experiencing unpredictable spikes or drops in user activity.

Cost Management: Teams looking to reduce cloud expenses by scaling down unused resources.

High Availability Requirements: Services that must have sufficient resources available to handle peak loads without degradation.

Automated DevOps Processes: Teams incorporating infrastructure scaling into CI/CD pipelines.

Resource-Intensive Applications: Workloads that require significant resources during specific periods.

3. Challenge Questions (Scenario-Based)
