Understanding Azure Blob Storage Tiers: Hot, Cool, and Archive Explained

Understand what Azure lifecycle management is and why it matters for data retention and cost savings.

1. Problem Statement

Azure Lifecycle Management – Automate Tiering (Hot → Cool → Archive)

Modern cloud applications generate and store massive amounts of data in Azure Blob Storage. While some of this data needs frequent access (Hot tier), a large portion becomes infrequently accessed over time (Cool) or must be retained for compliance and auditing (Archive). Manually identifying and moving blobs between access tiers is inefficient, error-prone, and costly. Without automation, organizations often face higher storage costs and compliance risks.

The challenge is to implement an automated lifecycle management policy that optimally transitions blobs from Hot to Cool to Archive based on defined criteria such as last modified date or naming patterns (prefix), while maintaining compliance and minimizing storage costs.

You are required to:

Configure a GPv2 storage account with lifecycle rules.

Define custom policies that automatically move blobs across access tiers.

Use Azure Portal or automation (CLI/ARM templates) to apply these policies.

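Ensure that all blobs meet the prerequisites: they start in the expected tier, are a supported blob type (tiering actions apply to block blobs), and live in the containers the rules target.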

Validate that data moves as expected and complies with retention strategies.
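The policy described in the steps above can be sketched as a small Python script that builds the lifecycle policy JSON. This is a minimal sketch, not the lab's official solution: the rule name, the `logs/app-` prefix, and the 30 and 90 day thresholds are assumptions chosen for illustration. In a `prefixMatch` entry, the first path segment is the container name.

```python
import json


def build_lifecycle_policy(prefix, cool_after_days, archive_after_days):
    """Return a lifecycle management policy as a plain dict.

    The rule moves block blobs under `prefix` to Cool and then to Archive,
    based on days since last modification.
    """
    rule = {
        "enabled": True,
        "name": "tier-hot-cool-archive",  # hypothetical rule name
        "type": "Lifecycle",
        "definition": {
            "filters": {
                "blobTypes": ["blockBlob"],
                "prefixMatch": [prefix],  # "<container>/<blob-name-prefix>"
            },
            "actions": {
                "baseBlob": {
                    "tierToCool": {
                        "daysAfterModificationGreaterThan": cool_after_days
                    },
                    "tierToArchive": {
                        "daysAfterModificationGreaterThan": archive_after_days
                    },
                }
            },
        },
    }
    return {"rules": [rule]}


# Assumed example values: container "logs", blobs starting with "app-".
policy = build_lifecycle_policy("logs/app-", 30, 90)
policy_json = json.dumps(policy, indent=2)
print(policy_json)

# Save the printed JSON as policy.json, then apply it with the Azure CLI:
#   az storage account management-policy create \
#       --account-name <storage-account> \
#       --resource-group <resource-group> \
#       --policy @policy.json
```

Generating the JSON from code keeps the thresholds in one place, which helps when the same rule shape is reused across several prefixes or storage accounts.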

This lab focuses on building and applying practical lifecycle management policies that enable organizations to manage data at scale in a cost-efficient, automated, and compliant manner.

2. Why We Need This Use Case

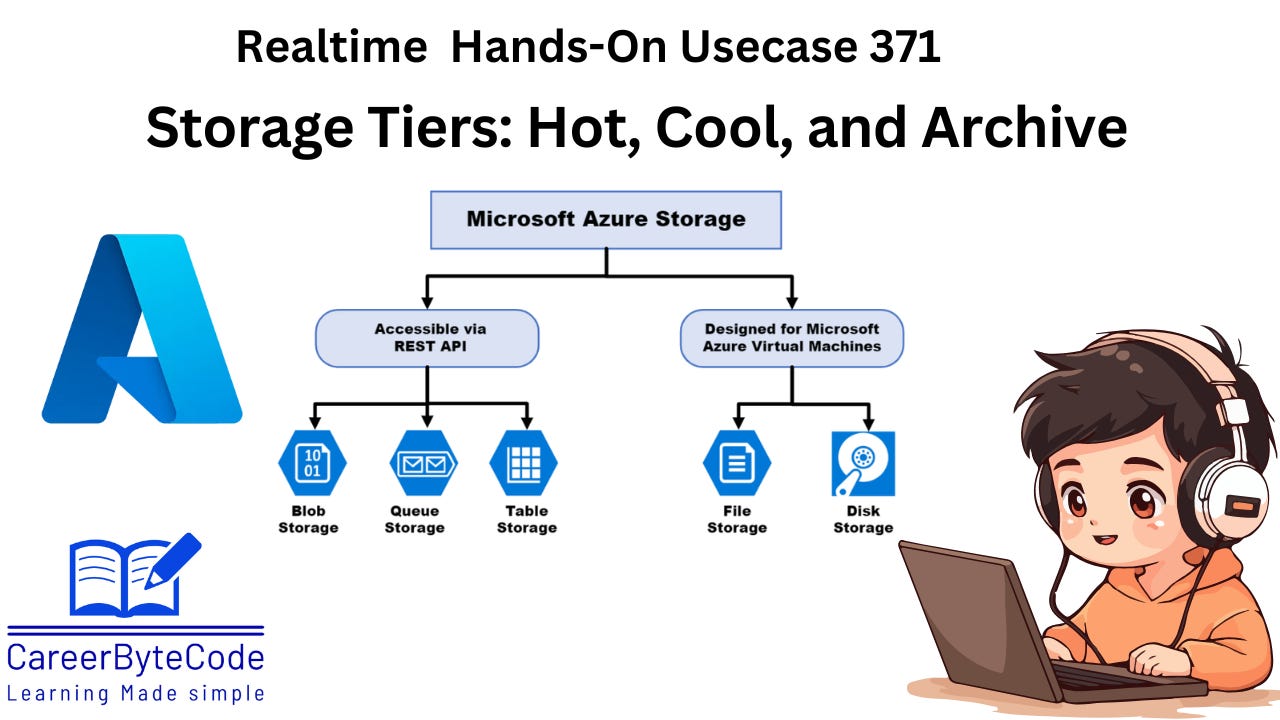

As organizations increasingly rely on cloud platforms for data storage, managing storage costs and performance becomes crucial. In Azure Blob Storage, data is stored in three primary tiers: Hot, Cool, and Archive. Each is optimized for a different access pattern and cost model.

Without a lifecycle management strategy:

Data that was once accessed frequently stays in the more expensive Hot tier long after access has dropped off.

Manual transitions between tiers are labor-intensive and error-prone.

Data required for compliance may be accidentally deleted or not archived properly.

This use case helps organizations automate the transition of blobs based on their age or last access/modification date. It allows:

Cost optimization by automatically moving older or less-used data to cheaper tiers.

Compliance enforcement by retaining data for fixed durations or archiving it securely.

Operational efficiency by eliminating manual data tiering and reducing the risk of human error.
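To make the age-based transition concrete, here is a minimal sketch that mimics how a rule with "days after modification greater than" thresholds would classify a blob. The function name and the 30 and 90 day defaults are assumptions for illustration. The real service evaluates rules on its own schedule, so actual tier changes lag behind what this local check predicts.

```python
from datetime import datetime, timedelta, timezone


def expected_tier(last_modified, now, cool_after_days=30, archive_after_days=90):
    """Return the tier a blob should end up in under an age-based rule.

    Uses strict "greater than" comparisons, matching the wording of the
    daysAfterModificationGreaterThan condition.
    """
    age_days = (now - last_modified).days
    if age_days > archive_after_days:
        return "Archive"
    if age_days > cool_after_days:
        return "Cool"
    return "Hot"


# Fixed reference time so the example is reproducible.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
for age in (5, 45, 200):
    tier = expected_tier(now - timedelta(days=age), now)
    print(f"{age} days old -> {tier}")
```

A check like this is useful during validation: compare the predicted tier with the tier the blob actually reports after the policy has had time to run.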

3. When We Need This Use Case

You need this use case in the following scenarios:

Massive Data Accumulation: When applications, logging systems, or data pipelines are generating large amounts of blob data daily.

Cost Management: When you're looking to reduce Azure costs by automatically shifting less-used data from the higher-cost Hot tier to the lower-cost Cool and Archive tiers.

Data Lifecycle Policies: When you want to align storage with compliance requirements like retaining data for 7 years.

Data Analytics Pipelines: When historical data is accessed only occasionally but must be retained for audit or reporting.

Cold Storage Use Cases: When backups, logs, and archives must be retained securely without being frequently accessed.
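For a scenario such as the 7-year retention mentioned above, the retention period has to be expressed in days before it can go into a rule. The sketch below converts years to days and runs a basic ordering check on the thresholds. The helper names are hypothetical, the 365-day year ignores leap days, and the ordering check is a local sanity test rather than a documented Azure requirement.

```python
def retention_days(years):
    """Approximate a retention period in days (365-day years, no leap days)."""
    return years * 365


def check_thresholds(cool_after, archive_after, delete_after):
    """Return a list of problems with the threshold ordering (empty if none)."""
    problems = []
    if cool_after >= archive_after:
        problems.append("Cool threshold should be lower than Archive threshold")
    if archive_after >= delete_after:
        problems.append("Archive threshold should be lower than delete threshold")
    return problems


def delete_action(years):
    """Build a baseBlob delete action that fires after the retention period."""
    return {"delete": {"daysAfterModificationGreaterThan": retention_days(years)}}


seven_years = retention_days(7)
print(seven_years)                             # 2555
print(check_thresholds(30, 90, seven_years))   # []
print(delete_action(7))
```

The `delete` entry can be merged into the `baseBlob` actions of a lifecycle rule. Whether deleting after the retention period satisfies a given compliance regime is a policy decision outside the scope of this sketch.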

4. Challenge Questions
